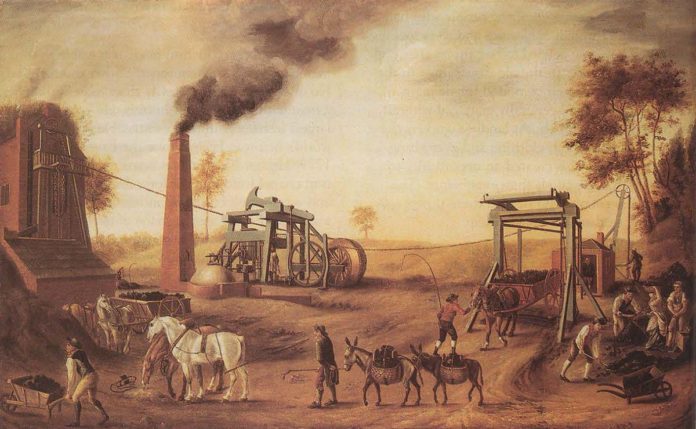

Dating back to the Industrial Revolution, people have speculated that machines would render human …

Webnode

“When looms weave by themselves, man’s slavery will end.” —Aristotle, 4th century BC

Stanford is hosting an event next month named “Intelligence Augmentation: AI Empowering People to Solve Global Challenges.” This title is telling and typical.

The notion that, at its best, AI will augment rather than replace humans has become a pervasive and influential narrative in the field of artificial intelligence today.

It is a reassuring narrative. Unfortunately, it is also deeply misguided. If we are to effectively prepare ourselves for the impact that AI will have on society in the coming years, it is important for us to be more clear-eyed on this issue.

It is not hard to understand why people are receptive to a vision of the future in which AI’s primary impact is to augment human activity. At an elemental level, this vision leaves us humans in control, unchallenged at the top of the cognitive food chain. It requires no deep, uncomfortable reconceptualizations from us about our place in the world. AI is, according to this line of thinking, just one more tool we have cleverly created to make our lives easier, like the wheel or the internal combustion engine.

But AI is not just one more tool, and uncomfortable reconceptualizations are on the horizon for us.

Chess provides an illustrative example to start with. Machine first surpassed man in chess in 1997, when IBM’s Deep Blue computer program defeated world chess champion Garry Kasparov in a widely publicized match. In response, in the years that followed, the concept of “centaur chess” emerged to become a popular intellectual touchstone in discussions about AI.

The idea behind centaur chess was simple: while the best AI could now defeat the best human at chess, an AI and human working together (a “centaur”) would be the most powerful player of all, because man and machine would bring complementary skills to bear. It was an early version of the myth of augmentation.

And indeed, for a time, mixed AI/human teams were able to outperform AI programs at chess. “Centaur chess” was hailed as evidence of the irreplaceability of human creativity. As one centaur chess advocate reasoned: “Human grandmasters are good at long-term chess strategy, but poor at seeing ahead for millions of possible moves—while the reverse is true for chess-playing AIs. And because humans and AIs are strong on different dimensions, together, as a centaur, they can beat out solo humans and computers alike.”

But as the years have gone by, machine intelligence has continued on its inexorable exponential upward trajectory, leaving human chess players far behind.

Today, no one talks about centaur chess. AI is now so far superior to humanity in this domain that a human player would simply have nothing to add. No serious commentator today would argue that a human working together with DeepMind’s AlphaZero chess program would have an advantage over AlphaZero by itself. In the world of chess, the myth of augmentation has been proven untenable.

Chess is just a board game. What about real-world settings?

The myth of augmentation has spread far and wide in real-world contexts, too. One powerful reason why: job loss from automation is a frightening prospect and a political hot potato.

Let’s unpack that. Entrepreneurs, technologists, politicians and others have much to gain by believing—and by persuading others to believe—that AI will not replace but rather will supplement humans in the workforce. Employment is one of the most basic social and political necessities in every society in the world today. To be openly job-destroying is therefore a losing proposition for any technology or business.

“AI is going to bring humans and machines closer together,” business leader Robin Bordoli said recently, echoing a narrative that has been on the lips of countless Fortune 500 CEOs in recent years. “It’s not about machines replacing humans, but machines augmenting humans. Humans and machines have different relative strengths and weaknesses, and it’s about the combination of these two that will allow human intents and business process to scale 10x, 100x, and beyond that in the coming years.”

Former IBM CEO Ginni Rometty summed it up even more succinctly in a 2018 Wall Street Journal op-ed: “AI—better understood as ‘augmented intelligence’—complements, rather than replaces, human cognition.”

Yet a moment’s honest reflection makes clear that many AI systems being built today will displace, not augment, vast swaths of human workers across the economy.

AI’s core promise—the reason we are pursuing it to begin with—is that it will be able to do things more accurately, more cheaply and more quickly than humans can do them today. Once AI can deliver on this promise, there will be no practical or economic justification for humans to continue to be involved in many fields.

For instance, once an AI system can provably drive a truck better and more safely in all conditions than a human can (the technology is not there today, but it is getting closer), it simply will not make sense for humans to continue driving trucks. In fact, it would be affirmatively harmful and wasteful to have a human in the loop: aside from saved labor costs, AI systems never speed, never get distracted, never drive drunk, and can stay on the road 24 hours a day without getting drowsy.

The startups and truck manufacturers developing self-driving truck technology today may not acknowledge it publicly, but the end game of their R&D efforts is not to augment human laborers (although that narrative always finds a receptive audience). It is to replace them. That is where the real value lies.

Radiology provides another instructive example. Radiologists’ primary responsibility is to examine medical images for the presence or absence of particular features, like tumors. Pattern recognition and object detection in images are exactly what deep learning excels at.

A common refrain in the field of radiology these days goes like this: “AI will not replace radiologists, but radiologists who use AI will replace radiologists who do not.” This is a quintessential articulation of the myth of augmentation.

And in the near term, it will be true. AI systems will not replace humans overnight, in radiology or in any other field. Workflows, organizational systems, infrastructure and user preferences take time to change. The technology will not be perfect at first. So to start, AI will indeed be used to augment human radiologists: to provide a second opinion, for instance, or to sift through troves of images to prioritize those that merit human review. In fact, this is already happening. Consider it the “centaur chess” phase of radiology.

But fast forward five or ten years. Once it is established beyond dispute that neural networks are superior to human radiologists at classifying medical images—across patient populations, care settings, disease states—will it really make sense to continue employing human radiologists? Consider that AI systems will be able to review images instantly, at zero marginal cost, for patients anywhere in the world, and that these systems will never stop improving.

In time, the refrain quoted above will prove less on-the-mark than the controversial but prescient words of AI legend Geoff Hinton: “We should stop training radiologists now. If you work as a radiologist, you are like Wile E. Coyote in the cartoon; you’re already over the edge of the cliff, but you haven’t looked down.”

What does all of this mean for us, for humanity?

A vision of the future in which AI replaces rather than augments human activity has a cascade of profound implications. We will briefly surface a few here, acknowledging that entire books can be and have been written on these topics.

To begin, there will be considerable human pain and dislocation from job loss. It will occur across social strata, geographies and industries. From security guards to accountants, from taxi drivers to lawyers, from cashiers to stockbrokers, from court reporters to pathologists, human workers across the economy will find their skills out of demand and their roles obsolete as increasingly sophisticated AI systems come to perform these activities better, cheaper and faster than humans can. It is not Luddite to acknowledge this inevitability.

Society needs to be nimble and imaginative in its public policy response in order to mitigate the effects of this job displacement. Meaningful investment in retraining and reskilling by both governments and private employers will be important in order to postpone the obsolescence of human workers in an increasingly AI-driven economy.

More fundamentally, a paradigm shift in how society conceives of resource allocation will be necessary in a world in which material goods and services are increasingly cheaply available thanks to automation, while demand for compensated human labor steadily shrinks.

The idea of a universal basic income—until recently, little more than a pet thought experiment among academics—has begun to be taken seriously by mainstream policymakers. Last year Spain’s national government launched the largest UBI program in history. One of the leading candidates in the 2020 U.S. presidential elections made UBI the centerpiece of his campaign. Expect universal basic income to become a normalized and increasingly important policy tool in the era of AI.

An important dimension of AI-driven job loss is that some roles will resist automation for far longer than others. The jobs in which humans will continue to outperform machines for the foreseeable future will not necessarily be those that are the most cognitively complex. Rather, they will be those in which our humanity itself plays an essential part.

Chief among these are roles that involve empathy, camaraderie, social interaction, the “human touch.” Human babysitters, nurses, therapists, schoolteachers, and social workers, for instance, will continue to find work for many years to come.

Likewise, humans will not be replaced any time soon in roles that require true originality and unconventional thinking. A cliché but insightful adage about the relationship between man and AI goes as follows: as AI gets better at knowing the right answers, humans’ most important role will be to know which questions to ask. Roles that demand this sort of imaginativeness include, for instance, academic researchers, entrepreneurs, technologists, artists, and novelists.

In the jobs that do remain as the years go by, then, people will spend less of their energy on tedious, repeatable, soulless tasks and more of it developing human relationships, managing interpersonal dynamics, thinking creatively.

But make no mistake: a larger, more profound transition is in store for humanity as AI assumes more and more of the responsibilities that people bear today. To put it simply, we will eventually enter a post-work world.

There will not be nearly enough meaningful jobs to employ every working-age person. More radically, we will not need people to work in order to generate the material wealth necessary for everyone’s healthy subsistence. AI will usher in an era of bounty. It will automate (and dramatically improve upon) the value-creating activities that humans today perform; it will, for instance, enable us to synthetically generate food, shelter, and medicine at scale and at low cost.

This is a startling, almost incomprehensible vision of the future. It will require us to reconceptualize what we value and what the meaning of our lives is.

Today, adult life is largely defined by what resources we have and by how we go about accumulating those resources—in other words, by work and money. If we relax these constraints, what will fill our lives?

No one knows what this future will look like, but here are some possible answers. More leisure time. More time to invest in family and to develop meaningful human relationships. More time for hobbies that give us joy, whether reading or fly fishing or photography. More mental space to be creative and productive for its own sake: in art, writing, music, filmmaking, journalism. More time to pursue our inborn curiosity about the world and to deepen our understanding of life’s great mysteries, from the atom to the universe. More capacity for the basic human impulse to explore: the earth, the seas, the stars.

The AI-driven transition to a post-work world will take many decades. It will be disruptive and painful. It will require us to completely reinvent our society and ourselves. But ultimately, it can and should be the greatest thing that has ever happened to humanity.
